A friend asked: does a 100-channel video monitoring system need a core switch? Before deciding whether to use one, we first need to understand what a core switch does.

First, the role of each layer of switches

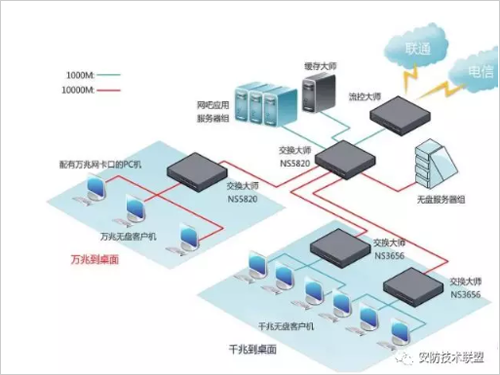

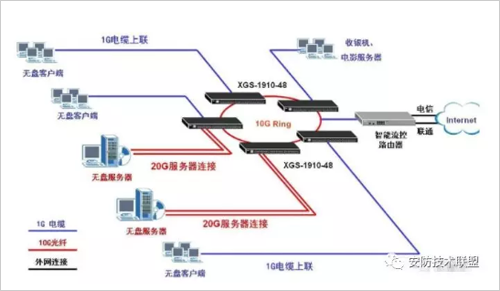

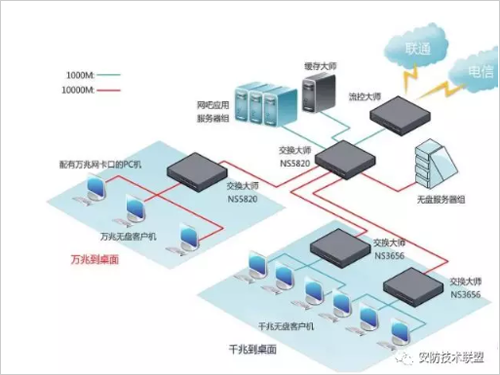

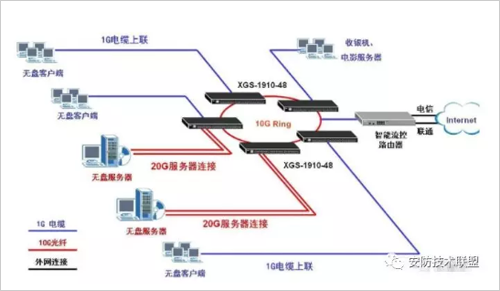

Access layer: The access layer connects directly to end-user devices and lets them join the network. It gives users access to application systems on the local network segment, mainly serving traffic between neighboring users and providing enough bandwidth for it.

Aggregation (convergence) layer: The "intermediary" between the access layer and the core layer. It aggregates traffic from workstations before that traffic reaches the core layer, which reduces the load on core layer devices. The aggregation layer handles policy enforcement, security, workgroup access, routing between virtual local area networks (VLANs), and filtering by source or destination address.

Core layer: The main purpose is to provide fast and reliable backbone data exchange through high-speed forwarding communication.

Second, why use core switches

As a rule of thumb, a core switch is not required below about 50 channels; a Layer 2 switch plus a router is enough. At around 100 channels, the efficient routing function of a core switch becomes worthwhile.

First of all, a 100-channel monitoring system is a medium-sized network. The load on it is neither huge nor trivial, and data delays can occur at any time.
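To get a feel for the load on a network of this size, the following minimal sketch estimates the aggregate video traffic. The per-camera bitrate of 4 Mbit/s and the 1 Gbit/s uplink are assumptions chosen for illustration; they do not come from any measurement, and real bitrates depend on resolution, codec, and frame rate.

```python
# Rough aggregate-bandwidth estimate for an IP camera network.
# ASSUMED_MBPS_PER_CAMERA and UPLINK_MBPS are hypothetical figures.

ASSUMED_MBPS_PER_CAMERA = 4.0   # assumed main-stream bitrate per channel
UPLINK_MBPS = 1000.0            # assumed 1 Gbit/s uplink toward the recorder


def aggregate_mbps(cameras: int, mbps_per_camera: float) -> float:
    """Total video traffic converging on the recorder or core, in Mbit/s."""
    return cameras * mbps_per_camera


def uplink_utilization(total_mbps: float, uplink_mbps: float) -> float:
    """Fraction of one uplink consumed by the video traffic."""
    return total_mbps / uplink_mbps


for cameras in (50, 100):
    total = aggregate_mbps(cameras, ASSUMED_MBPS_PER_CAMERA)
    share = uplink_utilization(total, UPLINK_MBPS)
    print(f"{cameras} channels: {total} Mbit/s, {share:.0%} of the uplink")
```

Under these assumed numbers, 100 channels produce 400 Mbit/s of steady traffic, which is already 40% of a single gigabit uplink before any bursts or playback traffic are counted.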

Core switches are generally Layer 3 switches. One of the main functions of a core switch is routing between VLANs, and that requires Layer 3 capability.

1. Without a core switch, all monitoring devices sit in one subnet. A broadcast storm can then paralyze the entire network, and security is also poor.

2. A Layer 3 core switch implements IP routing in hardware, and its optimized routing software makes the routing process more efficient. This solves the speed problem of traditional routers, which route in software. As mentioned above, the Layer 3 core switch also plays the important role of connecting subnets while keeping forwarding fast and efficient.

With 100-channel monitoring, the number of devices in a single network should not be too large. Dividing the network into VLANs, and therefore into several IP subnets, is unavoidable if broadcast storms are to be prevented. Forwarding between those subnets then depends on the Layer 3 switch as the "mainstay".
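The subnet division described above can be sketched with Python's standard `ipaddress` module. The address block `192.168.10.0/24`, the VLAN IDs, and the convention that the first usable address in each subnet is the Layer 3 switch interface are all assumptions made for this illustration.

```python
import ipaddress

# Hypothetical addressing plan: one /24 block split into four /26 subnets,
# each intended to map to its own VLAN.
CAMERA_BLOCK = ipaddress.ip_network("192.168.10.0/24")


def plan_vlans(block, new_prefix=26, first_vlan_id=10, step=10):
    """Split a block into equal subnets and pair each with a VLAN ID."""
    plan = []
    for index, subnet in enumerate(block.subnets(new_prefix=new_prefix)):
        plan.append({
            "vlan": first_vlan_id + index * step,
            "subnet": subnet,
            # Assumed convention: first usable address is the gateway
            # (the Layer 3 switch interface for this VLAN).
            "gateway": subnet.network_address + 1,
            # Network and broadcast addresses cannot be assigned to hosts.
            "usable_hosts": subnet.num_addresses - 2,
        })
    return plan


for entry in plan_vlans(CAMERA_BLOCK):
    print(entry["vlan"], entry["subnet"], entry["gateway"], entry["usable_hosts"])
```

Each /26 subnet holds 62 usable addresses, so four of them cover 100 cameras with room to spare, and a broadcast in one VLAN reaches at most the devices in that subnet.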

3. A Layer 3 core switch is expandable. It connects to multiple subnets, and each subnet only establishes a logical connection with the Layer 3 switching module. Because expansion module interfaces are reserved, new network equipment can be added directly without changing the existing network layout or equipment, which protects the original investment.

Strong security is another attraction of Layer 3 switches. Sitting at the core of the network, a Layer 3 switch is an obvious target for attackers. On the software side, a reliable firewall can be configured to block unidentified packets, and access lists can be set up to stop internal users from reaching particular IP addresses.
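How an access list restricts traffic can be illustrated with a small first-match evaluator. This is a simplified model, not the syntax or behavior of any particular vendor: the `Rule` type, the addresses, and the default-deny fallback are assumptions chosen for this sketch.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str                          # "permit" or "deny"
    source: ipaddress.IPv4Network        # who is sending
    destination: ipaddress.IPv4Network   # where the packet is going


def evaluate(rules, src, dst, default="deny"):
    """Return the action of the first rule matching both addresses."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for rule in rules:
        if src_ip in rule.source and dst_ip in rule.destination:
            return rule.action
    return default  # assumed fallback when no rule matches


# Hypothetical policy: cameras may reach the server subnet,
# except for one management host.
RULES = [
    Rule("deny", ipaddress.ip_network("192.168.10.0/24"),
         ipaddress.ip_network("192.168.20.5/32")),
    Rule("permit", ipaddress.ip_network("192.168.10.0/24"),
         ipaddress.ip_network("192.168.20.0/24")),
]

print(evaluate(RULES, "192.168.10.10", "192.168.20.5"))
print(evaluate(RULES, "192.168.10.10", "192.168.20.9"))
```

Rule order matters in a first-match list: the narrower deny has to appear before the broader permit, otherwise it would never be reached.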

Third, what is the core switch

"Core switch" is not a type of switch; it is the name given to a switch placed at the core layer (the backbone of the network). Large enterprise networks and Internet cafes generally buy core switches for their strong expansion capability, which protects the original investment. A core switch comes into play once the number of computers reaches a certain level; below about 50, a core switch is basically unnecessary and a router is enough. The term is relative to the network architecture: in a small LAN of a few computers, an 8-port switch can be called the core switch. In the network industry, a core switch refers to a powerful Layer 2 or Layer 3 switch with network management functions. For a network of more than 100 computers to run stably and at high speed, a core switch is indispensable.

Fourth, what is the difference between the core switch and the ordinary switch?

1, the difference between the ports

An ordinary switch generally has 24 to 48 ports, most of them Gigabit Ethernet or 100M Ethernet. Its main job is to connect user devices or to aggregate traffic from some access layer switches. It supports VLAN configuration, simple routing protocols, and some simple SNMP functions, and its backplane bandwidth is relatively small.

A core switch has a large number of ports, is usually modular, and can be freely fitted with optical ports and Gigabit Ethernet ports. Core switches are generally Layer 3 switches and support advanced features such as routing protocols, ACLs, QoS, and load balancing. Most importantly, the backplane bandwidth of a core switch is much higher than that of an ordinary switch, and a core switch usually has separate engine modules in an active/standby arrangement.

2, the difference in how users connect to or access the network

The part of the network that users connect to directly is usually called the access layer. The part between the access layer and the core layer is called the distribution layer or the aggregation layer. The access layer exists to let end users connect to the network, so access layer switches are low in cost and high in port density. An aggregation layer switch is the aggregation point for multiple access layer switches. It must handle all traffic from the access layer devices and provide uplinks to the core layer, so aggregation layer switches have higher performance, fewer interfaces, and a higher switching rate.

The backbone of the network is called the core layer. Its main purpose is to provide an optimized and reliable backbone transmission structure through high-speed forwarding. Core layer switches therefore need higher reliability, performance, and throughput.

Compared with ordinary switches, data center switches need features such as large caches, high capacity, virtualization, FCoE, and Layer 2 TRILL technology.

1, large cache technology

A data center switch changes the outbound port caching mode of the traditional switching system. It uses a distributed cache architecture with a much larger cache than an ordinary switch: the cache capacity can exceed 1G, whereas an ordinary switch only reaches 2~4M. Each port can buffer 200 milliseconds of burst traffic at full 10 Gbit/s line rate. With bursty traffic, the large cache can therefore still guarantee zero packet loss on the network, which suits the heavy traffic of data center servers.
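The 200 millisecond figure can be checked with simple arithmetic. The sketch below assumes the worst case, in which a port receives a burst at full line rate and nothing drains from the buffer during that time. The 4 MB value used for the ordinary switch reads the "2~4M" figure as megabytes, which is an assumption.

```python
def burst_buffer_bytes(line_rate_bps: int, burst_ms: int) -> int:
    """Bytes needed to hold a burst of burst_ms at line_rate_bps."""
    bits = line_rate_bps * burst_ms // 1000
    return bits // 8


def buffer_holds_ms(buffer_bytes: int, line_rate_bps: int) -> float:
    """How long a buffer of buffer_bytes can absorb traffic at line rate."""
    return buffer_bytes * 8 * 1000 / line_rate_bps


TEN_GBPS = 10_000_000_000

needed = burst_buffer_bytes(TEN_GBPS, 200)
print(needed // 1_000_000, "MB per port for 200 ms at 10 Gbit/s")

# Assumed: "4M" means 4 megabytes of buffer on an ordinary switch.
print(buffer_holds_ms(4_000_000, TEN_GBPS), "ms absorbed by a 4 MB buffer")
```

Under these assumptions one port needs about 250 MB to ride out a 200 ms burst, while a 4 MB buffer is exhausted after roughly 3.2 ms, which is why buffer size matters so much for bursty server traffic.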

2, high-capacity equipment

Data center network traffic is characterized by high-density application scheduling and sudden bursts that need buffering. An ordinary switch, by contrast, is designed mainly for basic interconnection. It cannot accurately identify and control services, cannot respond quickly or guarantee zero packet loss under heavy load, and cannot guarantee service continuity. The reliability of the system then depends mainly on the reliability of the individual device.

Ordinary switches therefore cannot meet the needs of data centers. Data center switches need high-capacity forwarding and must support high-density 10 Gigabit boards, such as 48-port 10 Gigabit boards. For a 48-port 10 Gigabit board to forward at full line rate, the switch has to use a CLOS distributed switching architecture. In addition, as 40G and 100G become common, 8-port 40G boards and 4-port 100G boards are gradually being commercialized. 40G and 100G boards for data center switches have already entered the market, meeting the high-density application requirements of data centers.
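What "full line rate" means for such a board can be worked out as a short calculation. The sketch assumes standard Ethernet framing, where each frame occupies an extra 20 bytes on the wire (8 bytes of preamble and start delimiter plus a 12-byte inter-frame gap), and uses minimum-size 64-byte frames as the worst case for packet rate.

```python
ETHERNET_OVERHEAD_BYTES = 20  # preamble + start delimiter (8) + inter-frame gap (12)


def board_bandwidth_gbps(ports: int, port_gbps: int, both_directions: bool = True) -> int:
    """Switching bandwidth a board needs to forward on all ports at once."""
    return ports * port_gbps * (2 if both_directions else 1)


def line_rate_pps(port_bps: int, frame_bytes: int = 64) -> int:
    """Maximum frames per second on one port for a given frame size."""
    wire_bits = (frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8
    return port_bps // wire_bits


# Board types mentioned in the text: (ports, Gbit/s per port)
for ports, speed in ((48, 10), (8, 40), (4, 100)):
    print(f"{ports} x {speed}G:", board_bandwidth_gbps(ports, speed), "Gbit/s")

per_port = line_rate_pps(10_000_000_000)
print("64-byte frames per 10G port:", per_port, "pps")
print("48-port board:", 48 * per_port, "pps")
```

A 48-port 10 Gigabit board therefore needs 960 Gbit/s of switching bandwidth counted in both directions, and roughly 14.88 million packets per second per port with minimum-size frames.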

3, virtualization technology

Data center network equipment needs to be highly manageable and highly secure, so data center switches also need to support virtualization. Virtualization turns physical resources into logically manageable resources, breaking down the barriers of physical structure. Virtualization of network equipment mainly includes many-to-one (several devices virtualized as one), one-to-many (one device virtualized as several), and many-to-many techniques.

Through virtualization technology, multiple network devices can be managed in a unified manner, and services on one device can be completely isolated, thereby reducing data center management costs by 40% and increasing IT utilization by approximately 25%.

4, TRILL technology

For Layer 2 networks in the data center, the original standard was the STP protocol, but it has drawbacks. STP works by blocking ports, so redundant links forward no data and their bandwidth is wasted. An STP network also has only one spanning tree, so frames must follow that tree, often by way of the root bridge, instead of the shortest path. This reduces forwarding efficiency across the whole network.
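The effect of port blocking can be illustrated on a small topology. The sketch below is a deliberate simplification: it builds a breadth-first tree from the root bridge with equal link costs, rather than running the real STP election with bridge IDs and path costs. The four-switch topology and its names are hypothetical.

```python
from collections import deque


def spanning_tree(links, root):
    """Build a breadth-first tree from root; return (parent map, blocked links)."""
    neighbors = {}
    for a, b in links:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)
    parent = {root: None}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for nxt in sorted(neighbors[node]):   # sorted for a deterministic result
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    active = {frozenset((child, par)) for child, par in parent.items() if par is not None}
    blocked = [link for link in links if frozenset(link) not in active]
    return parent, blocked


def tree_path(parent, src, dst):
    """Path from src to dst that uses only active (unblocked) links."""
    def to_root(node):
        path = [node]
        while parent[path[-1]] is not None:
            path.append(parent[path[-1]])
        return path
    up, down = to_root(src), to_root(dst)
    # Drop shared ancestors above the lowest common one.
    while len(up) > 1 and len(down) > 1 and up[-2] == down[-2]:
        up.pop()
        down.pop()
    return up + down[-2::-1]


# Hypothetical topology with redundant links; "A" acts as the root bridge.
LINKS = [("A", "B"), ("A", "C"), ("B", "C"), ("B", "D"), ("C", "D")]

parent, blocked = spanning_tree(LINKS, "A")
print("blocked links:", blocked)
print("C to D via tree:", tree_path(parent, "C", "D"))
```

In this example two of the five links carry no traffic, and frames from C to D take three hops through the root even though a direct C-D link exists. This is the wasted bandwidth and detour that multipath Layer 2 protocols aim to remove.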

STP is therefore no longer suitable as very large data centers expand. TRILL was created in response to these shortcomings of STP and is designed for data center applications. The TRILL protocol combines the simple configuration and flexibility of Layer 2 with the convergence and scale of Layer 3. Without any extra Layer 2 configuration, the whole network can forward without loops. TRILL is a basic Layer 2 feature of data center switches and is not available in ordinary switches.

5, FCoE technology

Traditional data centers often have a separate data network and storage network, while in the new generation of data centers the trend toward network convergence is increasingly clear. FCoE technology makes this convergence possible. FCoE is a technique that encapsulates the data frames of the storage network inside Ethernet frames for forwarding. This convergence has to be implemented on the data center switch; ordinary switches generally do not support FCoE.

The network technologies above are not available in ordinary switches. They are the main technologies of data center switches and are aimed at next-generation data centers and cloud data centers. These new technologies have helped data centers develop rapidly.

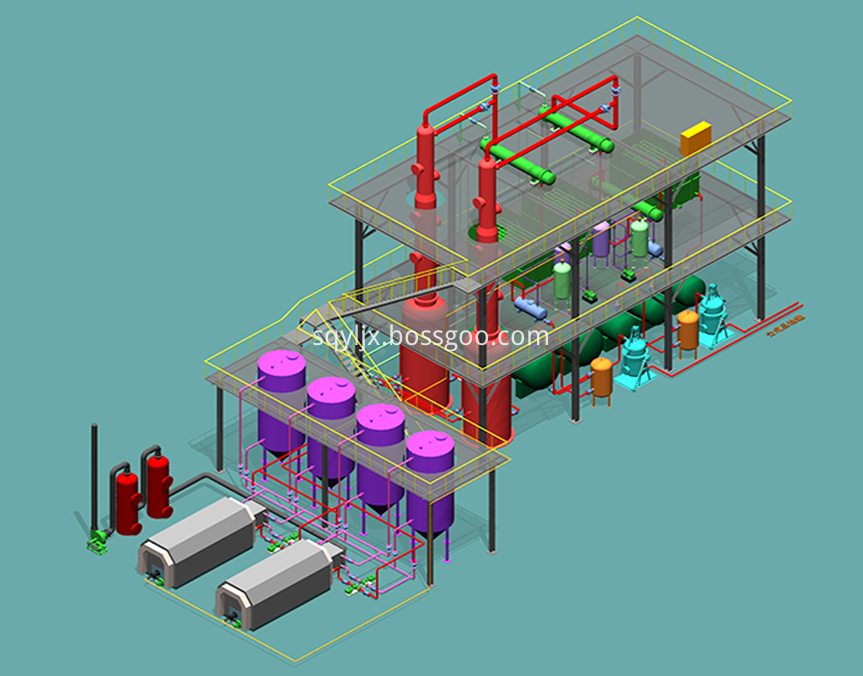

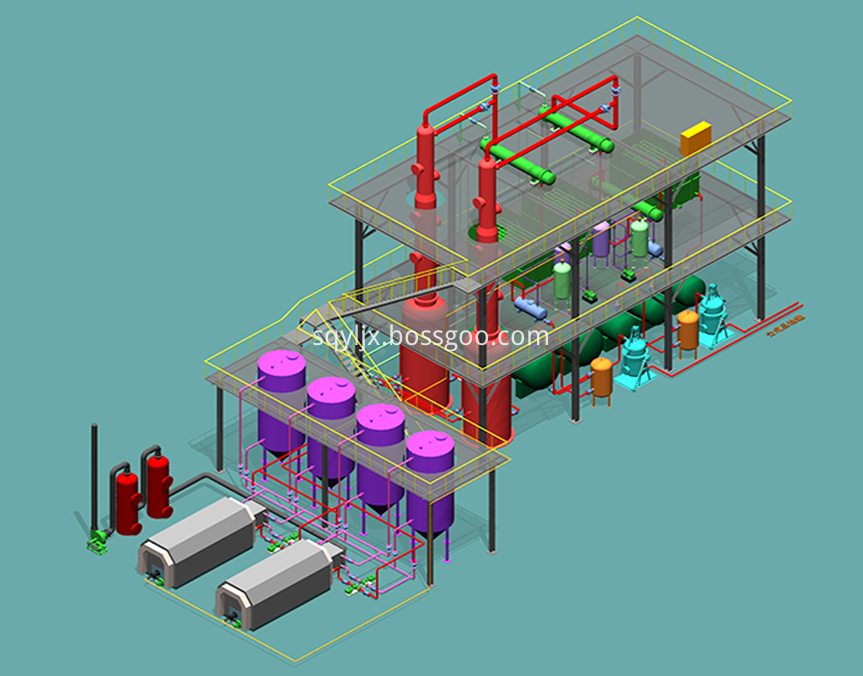
